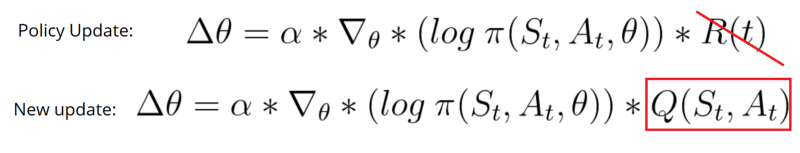

The Actor Critic method uses a better score function. Instead of waiting until the end of the episode, as we do in Monte Carlo REINFORCE, we make an update at each step (TD learning).

Because we do an update at each time step, we can’t use the total rewards R(t). Instead, we need to train a Critic model that approximates the value function (remember that the action-value function estimates the expected future reward given a state and an action). This value function replaces the reward function in policy gradient, which only calculates the rewards at the end of the episode.
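The difference between the two update targets can be sketched in a few lines. This is a minimal illustration, assuming a discount factor `gamma` and a Critic estimate for the next step that are made-up numbers here: the Monte Carlo return needs every reward of the episode, while the one-step TD target only needs the immediate reward and the Critic's estimate.

```python
def monte_carlo_return(rewards, gamma):
    """Discounted return from the first step; only computable once the episode is over."""
    g = 0.0
    for r in reversed(rewards):
        g = r + gamma * g
    return g


def td_target(reward, next_estimate, gamma, done=False):
    """One-step TD target: bootstraps from the Critic's estimate of the next step.

    next_estimate stands in for the Critic's value of (S_{t+1}, A_{t+1});
    it is ignored when the episode has just terminated.
    """
    return reward if done else reward + gamma * next_estimate


episode_rewards = [1.0, 1.0, 1.0]
print(monte_carlo_return(episode_rewards, gamma=0.9))  # needs all three rewards
print(td_target(1.0, next_estimate=1.9, gamma=0.9))    # needs one reward and one estimate
```

Here 1.9 happens to be the exact return from the second step, so both targets agree (about 2.71). In practice the Critic's estimate is imperfect, so the TD target is biased, but it is available immediately after each step.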

How Actor Critic works

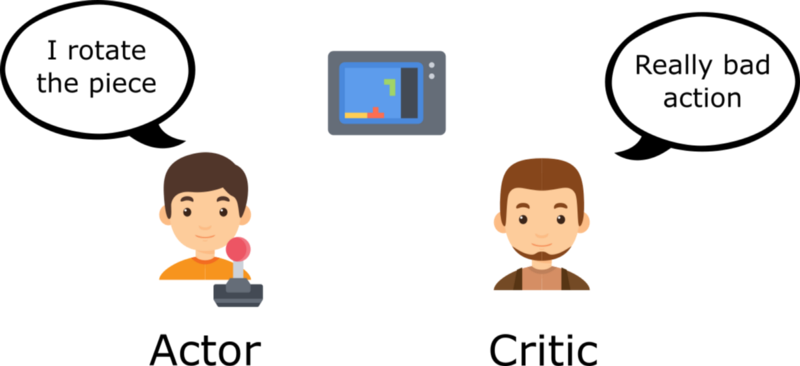

Imagine you play a video game with a friend who gives you some feedback. You’re the Actor and your friend is the Critic.

At the beginning, you don’t know how to play, so you try some actions at random. The Critic observes your actions and provides feedback.

Learning from this feedback, you’ll update your policy and get better at playing that game.

Meanwhile, your friend (the Critic) will also update the way they give feedback, so it can be better next time.

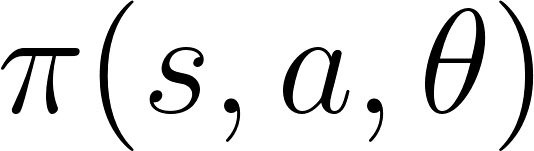

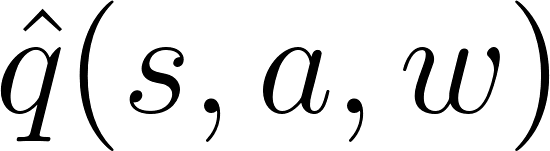

As we can see, the idea of Actor Critic is to have two neural networks. We estimate both:

ACTOR: a policy function that controls how our agent acts.

CRITIC: a value function that measures how good these actions are.

Both run in parallel.

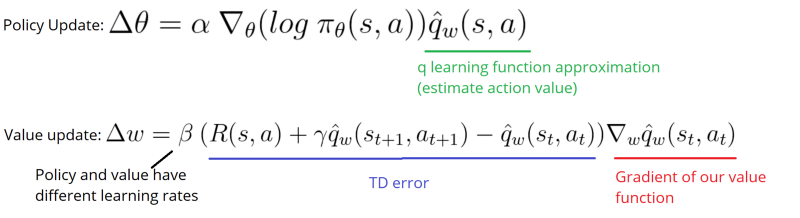

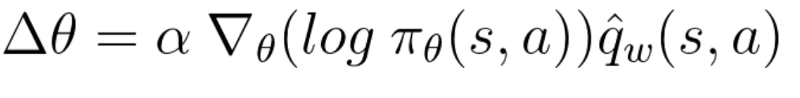

Because we have two models (Actor and Critic) that must be trained, we have two sets of weights (θ for our Actor and w for our Critic) that must be optimized separately:

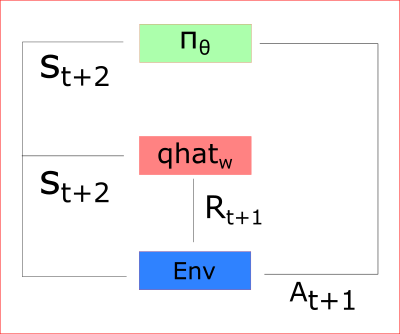
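A minimal sketch of the two components with their separate weight sets follows. It assumes linear models over a hypothetical 4-dimensional state feature vector instead of neural networks, and a state-value Critic V_w(s); the class names `Actor` and `Critic` are illustrative only.

```python
import math
import random


def softmax(logits):
    """Numerically stable softmax."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


class Actor:
    """Policy pi_theta(a|s): linear logits over state features followed by a softmax."""

    def __init__(self, n_features, n_actions, rng):
        # theta: one weight row per action (the Actor's own parameters)
        self.theta = [[rng.uniform(-0.1, 0.1) for _ in range(n_features)]
                      for _ in range(n_actions)]

    def probs(self, state):
        logits = [sum(w * x for w, x in zip(row, state)) for row in self.theta]
        return softmax(logits)

    def act(self, state, rng):
        """Sample an action index from pi_theta(.|state)."""
        p = self.probs(state)
        return rng.choices(range(len(p)), weights=p)[0]


class Critic:
    """State-value estimate V_w(s): a linear function of the same state features."""

    def __init__(self, n_features):
        # w: the Critic's parameters, separate from the Actor's theta
        self.w = [0.0] * n_features

    def value(self, state):
        return sum(w * x for w, x in zip(self.w, state))


rng = random.Random(0)
actor = Actor(n_features=4, n_actions=2, rng=rng)
critic = Critic(n_features=4)
state = [0.1, -0.2, 0.05, 0.3]   # hypothetical state features
print(actor.probs(state), actor.act(state, rng), critic.value(state))
```

Keeping θ and w in separate objects is what allows each to be optimized with its own update rule and learning rate.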

At each time step t, we take the current state $S_t$ from the environment and pass it as input to our Actor and our Critic.

Our policy takes the state and outputs an action $A_t$, and the agent receives a new state $S_{t+1}$ and a reward $R_{t+1}$.

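Thanks to that, the Critic computes the value of taking action $A_t$ in state $S_t$, and the Actor updates its policy parameters θ using this value.

With its updated parameters, the Actor then produces the next action $A_{t+1}$ given the new state $S_{t+1}$. The Critic then updates its value parameters w, where β is the Critic’s learning rate and γ the discount factor:

$$\Delta w = \beta \left( R_{t+1} + \gamma\, q_w(S_{t+1}, A_{t+1}) - q_w(S_t, A_t) \right) \nabla_w q_w(S_t, A_t)$$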
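The step loop above can be sketched as a tabular Q Actor-Critic. This is a minimal sketch under stated assumptions: a hypothetical 5-cell corridor environment where moving right into the last cell ends the episode with reward 1, a softmax Actor over tabular preferences θ, and a tabular Critic q_w(s, a). Because the Critic is tabular, the gradient of q_w(S_t, A_t) is one-hot, so the Critic update adjusts a single table entry. The values α = β = 0.1 and γ = 0.9 are arbitrary choices.

```python
import math
import random

N_STATES, LEFT, RIGHT = 5, 0, 1   # corridor cells 0..4; reaching cell 4 ends the episode


def env_step(s, a):
    """Hypothetical toy corridor: returns (S_{t+1}, R_{t+1}, done)."""
    s_next = max(0, s - 1) if a == LEFT else s + 1
    done = s_next == N_STATES - 1
    return s_next, (1.0 if done else 0.0), done


def policy(theta, s):
    """Actor pi_theta(.|s): softmax over tabular preferences theta[s]."""
    m = max(theta[s])
    exps = [math.exp(p - m) for p in theta[s]]
    z = sum(exps)
    return [e / z for e in exps]


def sample(theta, s, rng):
    return rng.choices([LEFT, RIGHT], weights=policy(theta, s))[0]


def train(episodes=1000, alpha=0.1, beta=0.1, gamma=0.9, max_steps=100, seed=0):
    rng = random.Random(seed)
    theta = [[0.0, 0.0] for _ in range(N_STATES)]   # Actor weights
    q = [[0.0, 0.0] for _ in range(N_STATES)]       # Critic weights w (tabular q_w)
    for _ in range(episodes):
        s = 0
        a = sample(theta, s, rng)                   # A_t from the Actor
        for _ in range(max_steps):
            s_next, r, done = env_step(s, a)        # environment returns S_{t+1}, R_{t+1}
            # Actor update: theta += alpha * q_w(S_t, A_t) * grad log pi(A_t | S_t)
            probs = policy(theta, s)
            for b in (LEFT, RIGHT):
                grad_log = (1.0 if b == a else 0.0) - probs[b]
                theta[s][b] += alpha * q[s][a] * grad_log
            if done:
                # Terminal step: the TD target is just the reward.
                q[s][a] += beta * (r - q[s][a])
                break
            a_next = sample(theta, s_next, rng)     # A_{t+1} from the updated Actor
            # Critic update: w += beta * (R_{t+1} + gamma*q_w(S_{t+1},A_{t+1}) - q_w(S_t,A_t))
            q[s][a] += beta * (r + gamma * q[s_next][a_next] - q[s][a])
            s, a = s_next, a_next
    return theta, q


theta, q = train()
print([round(policy(theta, s)[RIGHT], 2) for s in range(N_STATES - 1)])
```

The printed values are the Actor's probability of moving right in each non-terminal cell; as the Critic learns that moving right is worth more, these typically rise above 0.5. Scaling the Actor step by q_w itself is the simple (and noisy) variant; the advantage function discussed next is one way to reduce that noise.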

As we saw in the article about improvements in Deep Q Learning, value-based methods have high variance.

To reduce this problem, we spoke about using the advantage function instead of the value function.

The advantage function is defined like this:

$$A(s,a) = Q(s,a) - V(s)$$

This function tells us how much better taking action a in state s is than the average action in that state. In other words, it calculates the extra reward we get by taking this action, beyond the expected value V(s) of that state.
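A small numeric sketch of A(s,a) = Q(s,a) − V(s), assuming made-up Q values and policy probabilities for a single state, and taking V(s) as the policy-weighted average of Q(s, ·):

```python
def state_value(q_values, probs):
    """V(s) = sum_a pi(a|s) * Q(s,a): the expected value of the state under the policy."""
    return sum(p * q for p, q in zip(probs, q_values))


def advantages(q_values, probs):
    """A(s,a) = Q(s,a) - V(s) for every action a in state s."""
    v = state_value(q_values, probs)
    return [q - v for q in q_values]


q_s = [1.0, 3.0, 2.0]      # hypothetical Q(s, a) for three actions
pi_s = [0.25, 0.25, 0.5]   # hypothetical pi(a | s)
print(advantages(q_s, pi_s))   # V(s) = 2.0, so A = [-1.0, 1.0, 0.0]
```

Under the policy, the advantages average to zero: an action only gets a positive advantage by beating the state's expected value.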

If A(s,a) > 0 (our action does better than the average value of that state), our gradient is pushed in that direction.

If A(s,a) < 0 (our action does worse than the average value of that state), our gradient is pushed in the opposite direction.
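The effect of the advantage's sign can be sketched with one policy-gradient step, θ ← θ + lr · A(s,a) · ∇θ log π(a|s), assuming a softmax policy over two hypothetical action preferences and an arbitrary learning rate of 0.5:

```python
import math


def softmax(prefs):
    m = max(prefs)
    exps = [math.exp(p - m) for p in prefs]
    z = sum(exps)
    return [e / z for e in exps]


def policy_gradient_step(prefs, action, advantage, lr=0.5):
    """One step of theta += lr * A(s,a) * grad log pi(a|s) for softmax preferences."""
    probs = softmax(prefs)
    return [p + lr * advantage * ((1.0 if i == action else 0.0) - probs[i])
            for i, p in enumerate(prefs)]


prefs = [0.0, 0.0]
for adv in (+1.0, -1.0):
    new_prob = softmax(policy_gradient_step(prefs, action=0, advantage=adv))[0]
    print(f"A = {adv:+.0f}: pi(a0|s) goes from 0.50 to {new_prob:.2f}")
```

With a positive advantage the probability of the taken action rises; with a negative one it falls; with zero advantage the policy is unchanged.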

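The problem with implementing this advantage function is that it requires two value functions, Q(s,a) and V(s). Fortunately, we can use the TD error as a good estimator of the advantage function, since its expected value equals Q(s,a) − V(s) when V is accurate:

$$\delta_t = R_{t+1} + \gamma V(S_{t+1}) - V(S_t)$$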
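A minimal numeric check of this idea, assuming the Critic's V values are exact and using a hypothetical stochastic transition with two equally likely next states: averaged over next states, the TD error equals the advantage, even though a single sample can differ.

```python
def td_error(reward, v_s, v_next, gamma, done=False):
    """One-step TD error: delta = r + gamma * V(s') - V(s)."""
    bootstrap = 0.0 if done else gamma * v_next
    return reward + bootstrap - v_s


gamma, v_s = 0.5, 4.0   # hypothetical discount and (assumed exact) V(s)

# Taking action a in s leads to one of two next states with equal probability.
outcomes = [(0.5, 1.0, 6.0), (0.5, 1.0, 10.0)]   # (probability, reward, V(s'))

q_sa = sum(p * (r + gamma * v) for p, r, v in outcomes)   # Q(s,a) = 5.0
advantage = q_sa - v_s                                     # A(s,a) = 1.0
expected_delta = sum(p * td_error(r, v_s, v, gamma) for p, r, v in outcomes)

print(advantage, expected_delta)       # 1.0 1.0: the TD error is right on average
print(td_error(1.0, v_s, 6.0, gamma))  # a single sample can differ: 0.0
```

This is why only one value function (V) needs to be learned: the sampled δ stands in for Q(s,a) − V(s).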

We have two different strategies to implement an Actor Critic agent:

A2C (Advantage Actor Critic)

A3C (Asynchronous Advantage Actor Critic)

We will work with A2C rather than A3C, for the reasons explained below. If you want to see a complete implementation of A3C, check out Arthur Juliani’s excellent A3C article and Doom implementation.

In A3C, we don’t use experience replay, as it requires a lot of memory. Instead, we asynchronously execute different agents in parallel on multiple instances of the environment. Each worker (a copy of the network) updates the global network asynchronously.

In A2C, the only difference is that we update the global network synchronously: we wait until all workers have finished their training and computed their gradients, average those gradients, and then update the global network.
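The synchronous step can be sketched as follows, assuming each worker has already produced a gradient of its loss as a plain list (the gradient values and learning rate are made up) and that the global update is a single gradient-descent step on the averaged gradient:

```python
def average_gradients(worker_grads):
    """Element-wise mean of the gradients reported by all workers."""
    n = len(worker_grads)
    return [sum(g[i] for g in worker_grads) / n for i in range(len(worker_grads[0]))]


def a2c_sync_update(global_params, worker_grads, lr):
    """Synchronous update: wait for every worker, average, then take one descent step."""
    avg = average_gradients(worker_grads)
    return [p - lr * g for p, g in zip(global_params, avg)]


params = [1.0, -2.0]
grads = [[0.5, 1.0], [1.5, -1.0], [1.0, 3.0]]   # hypothetical gradients from 3 workers
params = a2c_sync_update(params, grads, lr=0.1)
print(params)  # every worker then starts its next segment from these same parameters
```

Because the update only happens after all gradients are in, every gradient in the average was computed from the same parameter version.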

The problem with A3C is explained in this awesome article. Because of the asynchronous nature of A3C, some workers (copies of the Agent) will be playing with an older version of the parameters, so the aggregated update will not be optimal.
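The stale-parameter issue can be illustrated on a toy problem. This sketch assumes a hypothetical one-parameter loss L(x) = x²/2 (gradient x) and an arbitrary learning rate; it shows only the staleness effect, not a full A3C or A2C implementation.

```python
def grad(x):
    """Gradient of the toy loss L(x) = x**2 / 2 (hypothetical stand-in for the real loss)."""
    return x


lr, x0 = 0.8, 1.0

# Asynchronous: both workers read x0, but B's gradient is applied after A has already moved x.
g_a, g_b = grad(x0), grad(x0)
x_async = x0 - lr * g_a          # worker A updates the global parameters
x_async = x_async - lr * g_b     # worker B applies a gradient computed from the old x0

# The same two steps when B's gradient is computed from the current parameters.
x_fresh = x0 - lr * grad(x0)
x_fresh = x_fresh - lr * grad(x_fresh)

print(x_async, x_fresh)  # the stale update overshoots past the minimum at 0
```

The stale gradient still points the way it did at x0, so applying it after the parameters have moved pushes them past the minimum.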

That’s why A2C waits for each actor to finish their segment of experience before updating the global parameters. Then, we restart a new segment of experience with all parallel actors having the same new parameters.

As a consequence, the training will be more cohesive and faster.

1) Implement a Java-based Actor-Critic Algorithm () function with specific interface and parameters;

2) For the iMADE implementation, implement a multiagent-based Actor-Critic Algorithm application.

In order to ensure a well-defined multiagent system (on top of the JADE platform), the application must consist of at least two iMADE agents, e.g. a Data-miner agent to get the data from the iMADE server and an Actor-Critic Algorithm agent to perform the Actor-Critic Algorithm operations.

Refer to the website: https://keras.io/examples/rl/actor_critic_cartpole/